Meta AI Researchers Introduce Mixture-of-Transformers (MoT): A Sparse Multi-Modal Transformer Architecture that Significantly Reduces Pretraining Computational Costs

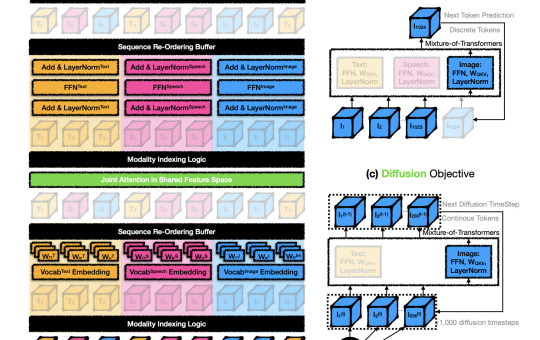

Advancements in AI have paved the way for multi-modal foundation models that simultaneously process text, images, and speech under a...