VectorSearch: A Comprehensive Solution to Document Retrieval Challenges with Hybrid Indexing, Multi-Vector Search, and Optimized Query Performance

The field of information retrieval has rapidly evolved due to the exponential growth of digital data. With the increasing volume of unstructured data, efficient methods for searching and retrieving relevant information have become more crucial than ever. Traditional keyword-based search techniques often need to capture the nuanced meaning of text, leading to inaccurate or irrelevant search results. This issue becomes more pronounced with complex datasets that span various media types, such as text, images, and videos. The widespread adoption of smart devices and social platforms has further contributed to this surge in data, with estimates suggesting that unstructured data could constitute 80% of the total data volume by 2025. As such, there is a critical need for robust methodologies that can transform this data into meaningful insights.

One of the main challenges in information retrieval is dealing with the high dimensionality and dynamic nature of modern datasets. Existing techniques often need help to provide scalable and efficient solutions for handling multi-vector queries or integrating real-time updates. This is particularly problematic for applications requiring rapid retrieval of contextually relevant results, such as recommender systems and large-scale search engines. While some progress has been made in enhancing retrieval mechanisms through latent semantic analysis (LSA) and deep learning models, these methods still need to address the semantic gaps between queries and documents.

Current information retrieval systems, like Milvus, have attempted to offer support for large-scale vector data management. However, these systems are hindered by their reliance on static datasets and a lack of flexibility in handling complex multi-vector queries. Traditional algorithms and libraries often depend heavily on main memory storage and cannot distribute data across multiple machines, limiting their scalability. This restricts their adaptability to real-world scenarios where data is constantly changing. As a result, existing solutions struggle to provide the precision and efficiency required for dynamic environments.

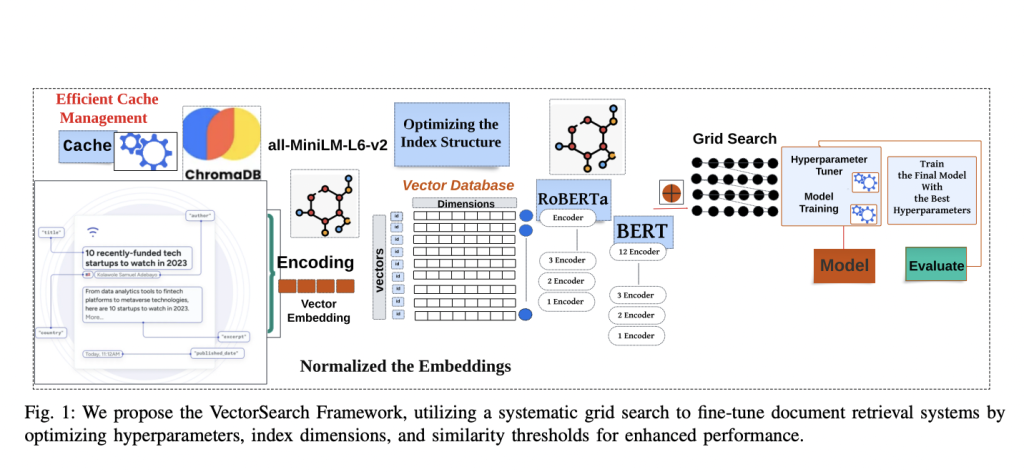

The research team at the University of Washington introduced VectorSearch, a novel document retrieval framework designed to address these limitations. VectorSearch integrates advanced language models, hybrid indexing techniques, and multi-vector query handling mechanisms to improve retrieval precision and scalability significantly. By leveraging both vector embeddings and traditional indexing methods, VectorSearch can efficiently manage large-scale datasets, making it a powerful tool for complex search operations. The framework incorporates cache mechanisms and optimized search algorithms, enhancing response times and overall performance. These capabilities set it apart from conventional systems, offering a comprehensive solution for document retrieval.

VectorSearch operates as a hybrid system that combines the strengths of multiple indexing techniques, such as FAISS for distributed indexing and HNSWlib for hierarchical search optimization. This approach enables the seamless management of large-scale datasets across multiple machines. Also, it introduces novel algorithms for multi-vector search, encoding documents into high-dimensional embeddings that capture the semantic relationships between different pieces of data. Integrating these embeddings into a vector database allows the system to retrieve relevant documents based on user queries efficiently. Experiments on real-world datasets demonstrate that VectorSearch outperforms existing systems, with a recall rate of 76.62% and a precision rate of 98.68% at an index dimension of 1024.

The performance evaluation of VectorSearch revealed significant improvements across various metrics. The system achieved an average query time of 0.47 seconds when using the BERT-base-uncased model and the FAISS indexing technique, which is considerably faster than traditional retrieval systems. This reduction in query time is attributed to the innovative use of hierarchical indexing and multi-vector query handling. Moreover, the proposed framework supports real-time updates, enabling it to handle dynamically evolving datasets without extensive re-indexing. These enhancements make VectorSearch a versatile solution for applications ranging from web search engines to recommendation systems.

Key takeaways from the research include:

High Precision and Recall: VectorSearch achieved a recall rate of 76.62% and a precision rate of 98.68% when using an index dimension of 1024, outperforming baseline models in various retrieval tasks.

Reduced Query Time: The system significantly reduced query time, achieving an average of 0.47 seconds for high-dimensional data retrieval.

Scalability: By integrating FAISS and HNSWlib, VectorSearch efficiently handles large-scale and evolving datasets, making it suitable for real-time applications.

Support for Dynamic Data: The framework supports real-time updates, enabling it to maintain high performance even as data changes.

In conclusion, VectorSearch presents a robust solution to the challenges faced by existing information retrieval systems. By introducing a scalable and adaptable approach, the research team has created a framework that meets the demands of modern data-intensive applications. The integration of hybrid indexing techniques, multi-vector search operations, and advanced language models results in a significant enhancement in retrieval accuracy and efficiency. This research paves the way for future advancements in the field, offering valuable insights into the development of next-generation document retrieval systems.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter..

Don’t Forget to join our 50k+ ML SubReddit.

We are inviting startups, companies, and research institutions who are working on small language models to participate in this upcoming ‘Small Language Models’ Magazine/Report by Marketchpost.com. This Magazine/Report will be released in late October/early November 2024. Click here to set up a call!

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.