Multi-Scale Geometric Analysis of Language Model Features: From Atomic Patterns to Galaxy Structures

Large Language Models (LLMs) have emerged as powerful tools in natural language processing, yet understanding their internal representations remains a significant challenge. Recent breakthroughs using sparse autoencoders have revealed interpretable “features” or concepts within the models’ activation space. While these discovered feature point clouds are now publicly accessible, comprehending their complex structural organization across different scales presents a crucial research problem. The analysis of these structures involves multiple challenges: identifying geometric patterns at the atomic level, understanding functional modularity at the intermediate scale, and examining the overall distribution of features at the larger scale. Traditional approaches have struggled to provide a comprehensive understanding of how these different scales interact and contribute to the model’s behaviour, making it essential to develop new methodologies for analyzing these multi-scale structures.

Previous methodological attempts to understand LLM feature structures have followed several distinct approaches, each with its limitations. Sparse autoencoders (SAE) emerged as an unsupervised method for discovering interpretable features, initially revealing neighbourhood-based groupings of related features through UMAP projections. Early word embedding methods like GloVe and Word2vec discovered linear relationships between semantic concepts, demonstrating basic geometric patterns such as analogical relationships. While these approaches provided valuable insights, they were limited by their focus on single-scale analysis. Meta-SAE techniques attempted to decompose features into more atomic components, suggesting a hierarchical structure, but struggled to capture the full complexity of multi-scale interactions. Function vector analysis in sequence models revealed linear representations of various concepts, from game positions to numerical quantities, but these methods typically focused on specific domains rather than providing a comprehensive understanding of the feature space’s geometric structure across different scales.

Researchers from the Massachusetts Institute of Technology propose a robust methodology to analyze geometric structures in SAE feature spaces through the concept of “crystal structures” – patterns that reflect semantic relationships between concepts. This methodology extends beyond simple parallelogram relationships (like man:woman::king: queen) to include trapezoid formations, which represent single-function vector relationships such as country-to-capital mappings. Initial investigations revealed that these geometric patterns are often obscured by “distractor features” – semantically irrelevant dimensions like word length that distort the expected geometric relationships. To address this challenge, the study introduces a refined methodology using Linear Discriminant Analysis (LDA) to project the data onto a lower-dimensional subspace, effectively filtering out these distractor features. This approach allows for clearer identification of meaningful geometric patterns by focusing on signal-to-noise eigenmodes, where signal represents inter-cluster variation and noise represents intra-cluster variation.

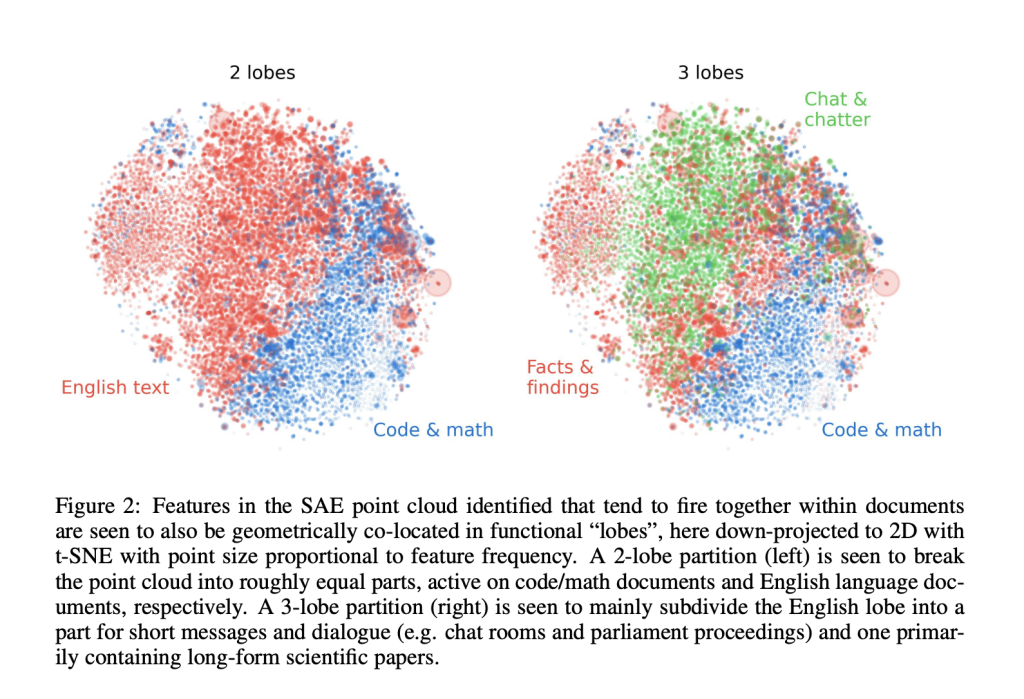

The methodology expands into analyzing larger-scale structures by investigating functional modularity within the SAE feature space, similar to specialized regions in biological brains. The approach identifies functional “lobes” through a systematic process of analyzing feature co-occurrences in document processing. Using a layer 12 residual stream SAE with 16,000 features, the study processes documents from The Pile dataset, considering features as “firing” when their hidden activation exceeds 1 and recording co-occurrences within 256-token blocks. The analysis employs various affinity metrics (simple matching coefficient, Jaccard similarity, Dice coefficient, overlap coefficient, and Phi coefficient) to measure feature relationships, followed by spectral clustering. To validate the spatial modularity hypothesis, the research implements two quantitative approaches: comparing mutual information between geometry-based and co-occurrence-based clustering results and training logistic regression models to predict functional lobes from geometric positions. This comprehensive methodology aims to establish whether functionally related features exhibit spatial clustering in the activation space.

The large-scale “galaxy” structure analysis of the SAE feature point cloud reveals distinct patterns that deviate from a simple isotropic Gaussian distribution. Examining the first three principal components demonstrates that the point cloud exhibits asymmetric shapes, with varying widths along different principal axes. This structure bears a resemblance to biological neural organizations, particularly the human brain’s asymmetric formation. These findings suggest that the feature space maintains organized, non-random distributions even at the largest scale of analysis.

The multi-scale analysis of SAE feature point clouds reveals three distinct levels of structural organization. At the atomic level, geometric patterns emerge in the form of parallelograms and trapezoids representing semantic relationships, particularly when distractor features are removed. The intermediate level demonstrates functional modularity similar to biological neural systems, with specialized regions for specific tasks like mathematics and coding. The galaxy-scale structure exhibits non-isotropic distribution with a characteristic power law of eigenvalues, most pronounced in the middle layers. These findings significantly advance the understanding of how language models organize and represent information across different scales.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[Trending] LLMWare Introduces Model Depot: An Extensive Collection of Small Language Models (SLMs) for Intel PCs

Asjad is an intern consultant at Marktechpost. He is persuing B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a Machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.