MathGAP: An Evaluation Benchmark for LLMs’ Mathematical Reasoning Using Controlled Proof Depth, Width, and Complexity for Out-of-Distribution Tasks

[ad_1]

Machine learning has considerably improved in evaluating large language models (LLMs) for their mathematical reasoning abilities, especially in handling complex arithmetic and deductive reasoning tasks. The field focuses on testing LLMs’ capacity to generalize and solve new types of problems, especially as arithmetic problems increase in complexity. Evaluations that explore reasoning capabilities in LLMs use benchmarks, such as mathematical word problems, to measure whether these models can apply learned patterns to novel situations. This research trajectory is essential to gauge an LLM’s problem-solving abilities and limits in comprehending and solving complex arithmetic tasks in unfamiliar contexts.

One central challenge with evaluating reasoning in LLMs is avoiding issues where models may have encountered similar data during training, known as data contamination. This problem is especially prevalent in arithmetic reasoning datasets, which often need more structural diversity, limiting their utility in fully testing a model’s generalization ability. Also, most existing evaluations focus on relatively straightforward proofs, which do not challenge LLMs in applying complex problem-solving strategies. Researchers increasingly emphasize the need for new evaluation frameworks that capture varying levels of proof complexity and distinct logical pathways to allow more accurate insights into LLMs’ reasoning abilities.

Methods for testing reasoning capabilities include datasets like GSM8k, which contains arithmetic word problems that test LLMs on basic to intermediate logic tasks. However, these benchmarks must be revised to push the limits of LLM reasoning, as they often contain repetitive patterns and need more variety in problem structures. Contamination in GSM8k, as researchers have noted, presents another issue; if a model has seen similar problems in its training, its performance in reasoning benchmarks cannot be considered a true measure of its generalization ability. This gap creates a pressing need for innovative evaluation frameworks that challenge LLMs by simulating real-world scenarios with greater complexity and variety in problem composition.

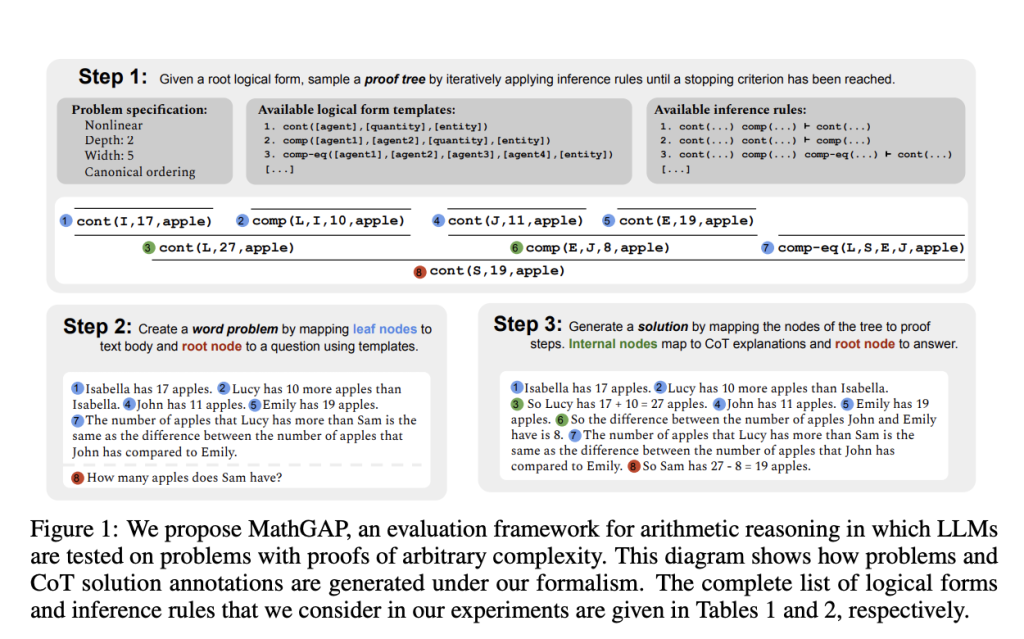

Researchers at ETH Zurich, Max Planck Institute for Intelligent Systems, Idiap Research Institute, and Purdue University have developed Mathematical Generalization on Arithmetic Proofs—MathGAP, a comprehensive framework for evaluating LLMs on problems with complex proof structures. MathGAP allows researchers to systematically test LLMs on math problems by controlling various parameters of problem complexity, such as proof depth, width, and tree structure, simulating real-world scenarios of increasing difficulty. The framework applies structured templates that help create non-repetitive, complex problems designed to be distinct from the data on which models were trained, thus avoiding data contamination. By adjusting problem parameters, MathGAP enables researchers to analyze how LLMs handle diverse reasoning tasks, effectively increasing the robustness of model evaluations.

MathGAP’s approach to problem generation involves using logical proof trees, representing problems as sequences of logical forms that must be traversed to find solutions. These proof trees range from simple linear to nonlinear models requiring more sophisticated reasoning. For instance, a linear proof tree may contain problems of depth six and width 5, while a nonlinear problem may increase the depth to 10 or more, challenging LLMs to maintain accuracy with complex, multi-step reasoning. The researchers include logical templates and inference rules within MathGAP, enabling the automatic generation of new problem instances. The resulting framework generates proof trees with varying depth, width, and complexity, such as nonlinear structures with depths of up to 6 and multiple logical steps, which researchers found particularly challenging for models, even state-of-the-art ones like GPT-4o.

Experiments with MathGAP reveal that as problem complexity increases, LLMs’ performance declines significantly, particularly when faced with nonlinear proof trees. For instance, accuracy rates drop consistently as proof depth and width increase, demonstrating that even leading models struggle with complex reasoning tasks. Zero-shot learning and in-context learning methods were tested, where models either received no prior examples or were provided simpler examples before the complex test problems. Interestingly, presenting LLMs with in-context examples did not always yield better results than zero-shot learning, especially in nonlinear proofs. For instance, in tests with linear depth problems up to level 10, performance was relatively high, but with nonlinear proofs, models like GPT-3.5 and Llama3-8B exhibited drastic accuracy declines.

The MathGAP framework’s results highlight how LLMs vary significantly in performance when provided with different in-context example distributions. A notable finding is that models typically perform better with a diverse set of examples that cover a range of complexities rather than repeated simple examples. Yet, even with carefully curated prompts, model performance does not consistently increase, underscoring the difficulty of handling complex, multi-step arithmetic tasks. Performance dropped to nearly zero for deeper nonlinear problems, where each model exhibited limitations in maintaining high accuracy as problems became more intricate.

Key takeaways from the research include:

Decreased Performance with Depth and Width: As proof depth reached levels between 6 and 10 in linear tasks, models demonstrated noticeable declines in performance. In contrast, nonlinear problems at depth 6 posed challenges even for the best-performing models.

Nonlinear Problems Pose Higher Challenges: The shift from linear to nonlinear proofs caused accuracy rates to drop rapidly, indicating that complex logical structures stretch current LLM capabilities.

Impact of In-Context Learning on Model Accuracy: In-context learning using simpler examples does not always improve performance on more complex problems, indicating that diverse, contextually varied prompts may benefit models more.

Sensitivity to Problem Order: Models performed best when proof steps followed a logical sequence, with deviations from canonical order introducing additional difficulty.

In conclusion, MathGAP is a novel and effective approach to assessing LLM reasoning in arithmetic problems of varied proof complexity, revealing critical insights into the strengths and weaknesses of current models. The framework highlights the challenges even the most advanced LLMs face in managing out-of-distribution problems with increasing complexity, underlining the importance of continued advancements in model generalization and problem-solving capabilities.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[Upcoming Live Webinar- Oct 29, 2024] The Best Platform for Serving Fine-Tuned Models: Predibase Inference Engine (Promoted)

Aswin AK is a consulting intern at MarkTechPost. He is pursuing his Dual Degree at the Indian Institute of Technology, Kharagpur. He is passionate about data science and machine learning, bringing a strong academic background and hands-on experience in solving real-life cross-domain challenges.

[ad_2]

Source link