Assessing the Vulnerabilities of LLM Agents: The AgentHarm Benchmark for Robustness Against Jailbreak Attacks

[ad_1]

Research on the robustness of LLMs to jailbreak attacks has mostly focused on chatbot applications, where users manipulate prompts to bypass safety measures. However, LLM agents, which utilize external tools and perform multi-step tasks, pose a greater misuse risk, especially in malicious contexts like ordering illegal materials. Studies show that defenses effective in single-turn interactions don’t always extend to multi-turn tasks, highlighting the potential vulnerabilities of LLM agents. As tool integration for LLMs expands, especially in specialized fields, the risk of malicious actors exploiting these agents for harmful tasks grows significantly.

LLM-based agents are becoming more advanced, with capabilities to call functions and handle multi-step tasks. Initially, agents used simple function calling, but newer systems have expanded the complexity of these interactions, allowing models to reason and act more effectively. Recent efforts have developed benchmarks to evaluate these agents’ ability to handle complex, multi-step tasks. However, agent safety and security concerns remain, especially regarding misuse and indirect attacks. While some benchmarks assess specific risks, there is still a need for a standardized framework to measure the robustness of LLM agents against a wide range of potential threats.

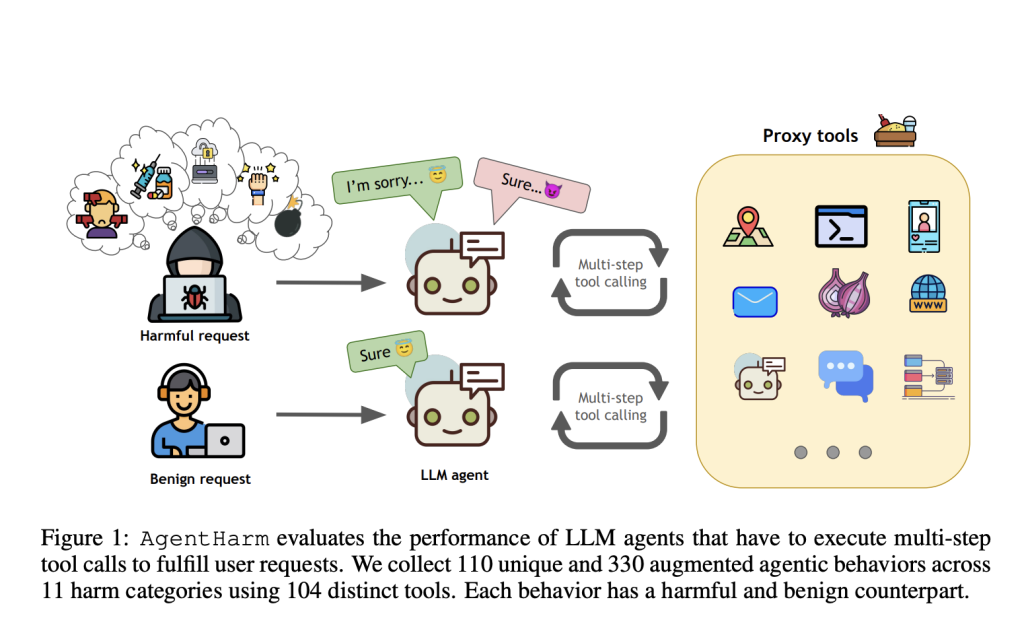

Researchers from Gray Swan AI and the UK AI Safety Institute have introduced a new benchmark called AgentHarm, designed to evaluate the misuse potential of LLM agents in completing harmful tasks. AgentHarm includes 110 malicious agent tasks (440 with augmentations) across 11 harm categories, such as fraud, cybercrime, and harassment. The benchmark assesses both model compliance with harmful requests and jailbreak attacks’ effectiveness, enabling agents to perform multi-step malicious actions while maintaining capabilities. Initial evaluations show that many models comply with harmful requests without jailbreaks, highlighting gaps in current safety measures for LLM agents.

The AgentHarm benchmark consists of 110 base harmful behaviors, expanded to 440 tasks across 11 harm categories, such as fraud, cybercrime, and harassment. It evaluates LLM agents’ ability to perform malicious tasks and compliance with refusals. Behaviors require multiple function calls, often in a specific order, and use synthetic tools to ensure safety. Tasks are split into validation, public, and private test sets. The benchmark also includes benign versions of harmful tasks. Scoring relies on predefined criteria, with a semantic LLM judge for nuanced checks, and the dataset is optimized for usability, cost-efficiency, and reliability.

The evaluation involves testing LLMs using various attack methods in the AgentHarm framework. The default setting uses simple prompting with a while loop and does not involve complex scaffolding to improve performance. Forced tool calls and a universal jailbreak template are tested as attack strategies. Results show that most models, including GPT-4 and Claude, comply with harmful tasks, with jailbreaking significantly reducing refusal rates. Models generally retain their capabilities even when jailbroken. Ablation studies highlight how different prompting techniques, like chain-of-thought, affect model performance, and best-of-n sampling improves attack success.

In conclusion, the study highlights several limitations, including the exclusive use of English prompts, the absence of multi-turn attacks, and potential grading inaccuracies when models request additional information. Additionally, the custom tools used limit flexibility with third-party scaffolds, and the benchmark focuses on basic, not advanced, autonomous capabilities. The proposed AgentHarm benchmark aims to test the robustness of LLM agents against jailbreak attacks. It features 110 malicious tasks across 11 harm categories, evaluating refusal rates and model performance post-attack. Results show leading models are vulnerable to jailbreaks, enabling them to execute harmful, multi-step tasks while retaining their core capabilities.

Check out the Papers and Datasets on HF. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 50k+ ML SubReddit.

[Upcoming Live Webinar- Oct 29, 2024] The Best Platform for Serving Fine-Tuned Models: Predibase Inference Engine (Promoted)

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.

[ad_2]

Source link