Empowering Backbone Models for Visual Text Generation with Input Granularity Control and Glyph-Aware Training

[ad_1]

Generating accurate and aesthetically appealing visual texts in text-to-image generation models presents a significant challenge. While diffusion-based models have achieved success in creating diverse and high-quality images, they often struggle to produce legible and well-placed visual text. Common issues include misspellings, omitted words, and improper text alignment, particularly when generating non-English languages such as Chinese. These limitations restrict the applicability of such models in real-world use cases like digital media production and advertising, where precise visual text generation is essential.

Current methods for visual text generation typically embed text directly into the model’s latent space or impose positional constraints during image generation. However, these approaches come with limitations. Byte Pair Encoding (BPE), commonly used for tokenization in these models, breaks down words into subwords, complicating the generation of coherent and legible text. Moreover, the cross-attention mechanisms in these models are not fully optimized, resulting in weak alignment between the generated visual text and the input tokens. Solutions such as TextDiffuser and GlyphDraw attempt to solve these problems with rigid positional constraints or inpainting techniques, but this often leads to limited visual diversity and inconsistent text integration. Additionally, most current models only handle English text, leaving gaps in their ability to generate accurate texts in other languages, especially Chinese.

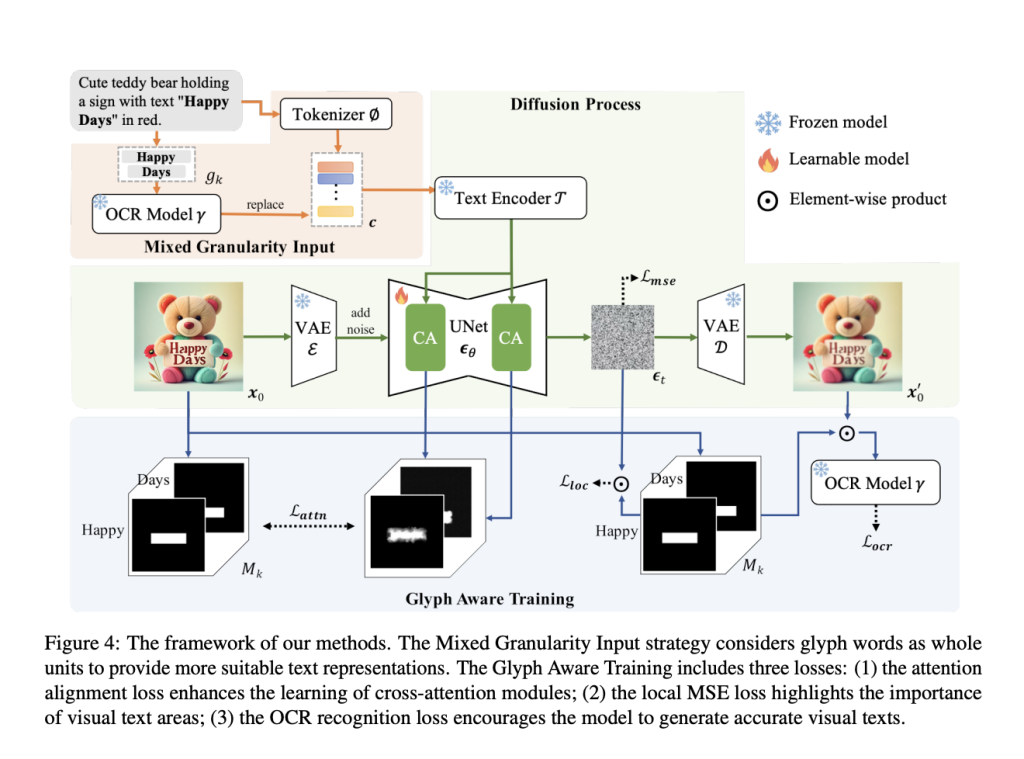

Researchers from Xiamen University, Baidu Inc., and Shanghai Artificial Intelligence Laboratory introduced two core innovations: input granularity control and glyph-aware training. The mixed granularity input strategy represents entire words instead of subwords, bypassing the challenges posed by BPE tokenization and allowing for more coherent text generation. Furthermore, a new training regime was introduced, incorporating three key losses: (1) attention alignment loss, which enhances the cross-attention mechanisms by improving text-to-token alignment; (2) local MSE loss, which ensures the model focuses on critical text regions within the image; and (3) OCR recognition loss, designed to drive accuracy in the generated text. These combined techniques improve both the visual and semantic aspects of text generation while maintaining the quality of image synthesis.

This approach utilizes a latent diffusion framework with three main components: a Variational Autoencoder (VAE) for encoding and decoding images, a UNet denoiser to manage the diffusion process, and a text encoder to handle input prompts. To counter the challenges posed by BPE tokenization, the researchers employed a mixed granularity input strategy, treating words as whole units rather than subwords. An OCR model is also integrated to extract glyph-level features, refining the text embeddings used by the model.

The model is trained using a dataset comprising 240,000 English samples and 50,000 Chinese samples, filtered to ensure high-quality images with clear and coherent visual text. Both SD-XL and SDXL-Turbo backbone models were utilized, with training conducted over 10,000 steps at a learning rate of 2e-5.

This solution shows significant improvements in both text generation accuracy and visual appeal. Precision, recall, and F1 scores for English and Chinese text generation notably surpass those of existing methods. For example, OCR precision reaches 0.360, outperforming other baseline models like SD-XL and LCM-LoRA. The method generates more legible, visually appealing text and integrates it more seamlessly into images. Additionally, the new glyph-aware training strategy enables multilingual support, with the model effectively handling Chinese text generation—an area where prior models fall short. These results highlight the model’s superior ability to produce accurate and aesthetically coherent visual text, while maintaining the overall quality of the generated images across different languages.

In conclusion, the method developed here advances the field of visual text generation by addressing critical challenges related to tokenization and cross-attention mechanisms. The introduction of input granularity control and glyph-aware training enables the generation of accurate, aesthetically pleasing text in both English and Chinese. These innovations enhance the practical applications of text-to-image models, particularly in areas requiring precise multilingual text generation.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 50k+ ML SubReddit

[Upcoming Event- Oct 17 202] RetrieveX – The GenAI Data Retrieval Conference (Promoted)

Aswin AK is a consulting intern at MarkTechPost. He is pursuing his Dual Degree at the Indian Institute of Technology, Kharagpur. He is passionate about data science and machine learning, bringing a strong academic background and hands-on experience in solving real-life cross-domain challenges.

[ad_2]

Source link