Advanced Privacy-Preserving Federated Learning (APPFL): An AI Framework to Address Data Heterogeneity, Computational Disparities, and Security Challenges in Decentralized Machine Learning

Federated learning (FL) is a powerful ML paradigm that enables multiple data owners to collaboratively train models without centralizing their data. This approach is particularly valuable in domains where data privacy is critical, such as healthcare, finance, and the energy sector. The core of federated learning lies in training models on decentralized data that stays on each client’s device. However, this distributed nature poses significant challenges, including data heterogeneity, computational disparities across devices, and security risks, such as potential exposure of sensitive information through model updates. Despite these issues, federated learning represents a promising path forward for leveraging large, distributed datasets to build highly accurate models while maintaining user privacy.

A significant problem federated learning faces is the varying quality and distribution of data across client devices. In traditional machine learning, data is typically assumed to be independent and identically distributed (IID). In a federated environment, however, client data is often unbalanced and non-IID. For example, one device may hold vastly different data than another, so the local training objectives differ across clients. This variation can result in suboptimal model performance when local updates are aggregated into a global model. In addition, the computational power of client devices varies widely, so slower devices delay training progress. These disparities make it difficult to synchronize the training process effectively, leading to inefficiencies and reduced model accuracy.
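To make the data-heterogeneity problem concrete, the following minimal sketch splits a toy labeled dataset so that each client only ever sees a few classes (a label-skew split). The function name `partition_by_label` and its parameters are hypothetical, chosen for illustration; this is not part of APPFL or any other framework.

```python
from collections import Counter

def partition_by_label(labels, num_clients, classes_per_client):
    """Split sample indices so each client holds only a few classes."""
    classes = sorted(set(labels))
    # owners[c] lists the clients that are allowed to hold class c.
    owners = {c: [] for c in classes}
    for client_id in range(num_clients):
        start = client_id * classes_per_client
        for k in range(classes_per_client):
            owners[classes[(start + k) % len(classes)]].append(client_id)

    shards = [[] for _ in range(num_clients)]
    seen = Counter()
    for idx, label in enumerate(labels):
        holders = owners[label]
        if not holders:
            continue  # class not assigned to any client in this toy split
        # Spread a class's samples round-robin over the clients that own it.
        shards[holders[seen[label] % len(holders)]].append(idx)
        seen[label] += 1
    return shards

# Toy dataset: 4 classes with 10 samples each.
labels = [i % 4 for i in range(40)]
shards = partition_by_label(labels, num_clients=2, classes_per_client=2)
for client_id, shard in enumerate(shards):
    print(client_id, sorted(Counter(labels[i] for i in shard).items()))
```

With this split the two clients share no classes at all, so a model trained only on one client's shard optimizes a different objective than a model trained on the other's.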

Previous approaches to addressing these issues have included algorithms like FedAvg, in which a central server aggregates client models by averaging their local updates. However, these methods have proven inadequate in dealing with data heterogeneity and computational variance. Asynchronous aggregation techniques, which allow faster devices to contribute updates without waiting for slower ones, have been introduced to mitigate delays. These techniques, however, tend to degrade model accuracy because clients contribute at unequal frequencies. On the security side, measures like differential privacy and homomorphic encryption are often too computationally expensive or fail to fully prevent data leakage through model gradients, leaving sensitive information at risk.
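The averaging step at the heart of FedAvg can be sketched in a few lines. This is a minimal illustration that assumes each client model is a flat list of floats and that clients are weighted by their local sample counts; the function name `fedavg` is a placeholder, not an API from any particular library.

```python
def fedavg(client_weights, client_sizes):
    """Average client parameter vectors, weighting each by its sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]

# Two clients: the second holds three times as much data as the first,
# so the result is pulled toward the second client's parameters.
global_model = fedavg([[1.0, 2.0], [3.0, 4.0]], client_sizes=[1, 3])
print(global_model)
```

Because the server must collect every selected client's model before it can average, a single slow client holds up the whole round, which is the synchronization cost described above.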

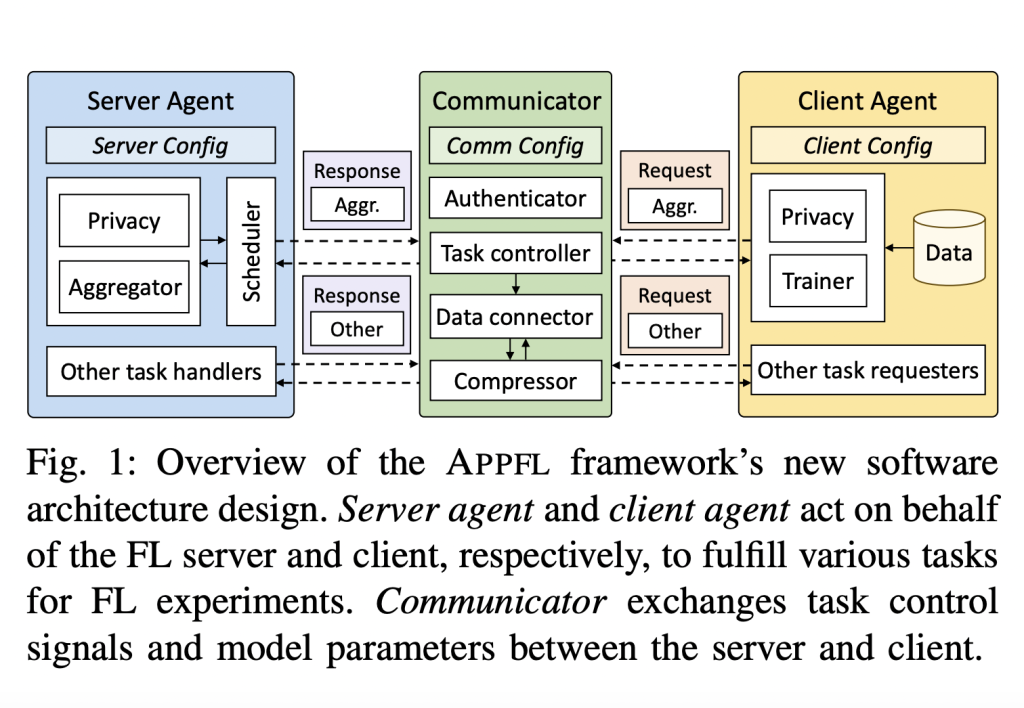

Researchers from Argonne National Laboratory, the University of Illinois, and Arizona State University have developed the Advanced Privacy-Preserving Federated Learning (APPFL) framework in response to these limitations. The framework offers a comprehensive and flexible solution to the technical and security challenges of current FL systems, improving their efficiency, security, and scalability. It supports both synchronous and asynchronous aggregation strategies, enabling it to adapt to various deployment scenarios. It also includes robust privacy-preserving mechanisms that protect against data reconstruction attacks while still allowing high-quality model training across distributed clients.

The core innovation in APPFL lies in its modular architecture, which allows developers to easily incorporate new algorithms and aggregation strategies tailored to specific needs. The framework integrates advanced aggregation techniques, including FedAsync and FedCompass, which synchronize model updates more effectively by dynamically adjusting the training process based on the computing power of each client. This approach reduces client drift, where faster devices disproportionately influence the global model, leading to more balanced and accurate model updates. APPFL also features efficient communication protocols and compression techniques, such as SZ2 and ZFP, which reduce the communication load during model updates by as much as 50%. These protocols ensure that even with limited bandwidth, federated learning processes can remain efficient without compromising performance.
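As a rough illustration of asynchronous aggregation, the sketch below mixes each arriving client model into the global model with a weight that shrinks as the update becomes more stale, in the spirit of FedAsync's polynomial staleness discount. The function names and the default values of `alpha` and `exponent` are assumptions made for this example; this is not APPFL's implementation, and FedCompass's client scheduling is not shown.

```python
def staleness_weight(staleness, exponent=0.5):
    """Polynomial discount: updates computed on older global models count less."""
    return (1.0 + staleness) ** (-exponent)

def async_update(global_model, client_model, staleness, alpha=0.5, exponent=0.5):
    """Blend one client's model into the global model as soon as it arrives."""
    a = alpha * staleness_weight(staleness, exponent)
    return [(1.0 - a) * g + a * c for g, c in zip(global_model, client_model)]

global_model = [0.0, 0.0]
# A fresh update (staleness 0) moves the global model further than
# an update computed against a global model that is 3 versions old.
fresh = async_update(global_model, [1.0, 1.0], staleness=0)
stale = async_update(global_model, [1.0, 1.0], staleness=3)
print(fresh, stale)
```

Here `staleness` is the number of global-model versions that were published between the moment the client downloaded the model and the moment its update arrived. The discount limits how far late updates from slow clients can pull the global model, while every arriving update is still applied immediately.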

The researchers performed extensive evaluations of APPFL’s performance in various real-world scenarios. In one experiment involving 128 clients, the framework reduced communication time by 40% compared to existing solutions while maintaining a model accuracy of over 90%. Moreover, its privacy-preserving strategies, including differential privacy and cryptographic techniques, successfully protected sensitive data from attacks without significantly impacting model accuracy. APPFL demonstrated a 30% reduction in training time on a large-scale healthcare dataset while preserving patient data privacy, making it a viable solution for privacy-sensitive environments. Another test in financial services showed that APPFL’s adaptive aggregation strategies led to more accurate predictions of loan default risks compared to traditional methods, despite the heterogeneity in the data across different financial institutions.
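One common way to apply differential privacy to model updates is to clip each update to a maximum L2 norm and then add Gaussian noise. The sketch below shows only that mechanical step. The article does not say which mechanism APPFL uses, so the function `clip_and_noise` and its parameters are assumptions, and `noise_std` is not calibrated here to any particular privacy budget.

```python
import math
import random

def clip_and_noise(update, clip_norm, noise_std, rng):
    """Clip an update to at most clip_norm in L2 norm, then add Gaussian noise."""
    norm = math.sqrt(sum(x * x for x in update))
    # Scale down only when the update exceeds the clipping threshold.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [x * scale for x in update]
    return [x + rng.gauss(0.0, noise_std) for x in clipped]

rng = random.Random(0)  # seeded only so that the example is repeatable
raw_update = [3.0, 4.0]  # L2 norm is 5.0
private_update = clip_and_noise(raw_update, clip_norm=1.0, noise_std=0.1, rng=rng)
print(private_update)
```

Clipping bounds how much any single client can change the aggregate, and the noise masks what remains. Larger `noise_std` values give stronger protection at the cost of accuracy, which is the trade-off the evaluation above refers to.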

The performance results also highlight APPFL’s ability to handle large models efficiently. For example, when training a Vision Transformer model with 88 million parameters, APPFL achieved a communication time reduction of 15% per epoch. This reduction is critical in scenarios where timely model updates are necessary, such as electric grid management or real-time medical diagnostics. The framework also performed well in vertical federated learning setups, where different clients hold distinct features of the same dataset, demonstrating its versatility across various federated learning paradigms.
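Communication savings for large models typically come from shrinking updates before they are sent. The sketch below shows the basic idea behind error-bounded lossy compression, the family that the SZ2 and ZFP compressors mentioned earlier belong to: each value is replaced by a small integer code, and the reconstruction is guaranteed to stay within a chosen error bound. This is a simplified stand-in using plain uniform quantization, not the SZ2 or ZFP algorithm, and the function names are hypothetical.

```python
def quantize(values, error_bound):
    """Map each float to an integer code on a grid of width 2 * error_bound."""
    step = 2.0 * error_bound
    return [round(v / step) for v in values]

def dequantize(codes, error_bound):
    """Reconstruct approximate floats from the integer codes."""
    step = 2.0 * error_bound
    return [c * step for c in codes]

update = [0.123, -0.456, 0.789, 0.0004]
error_bound = 0.01
codes = quantize(update, error_bound)
restored = dequantize(codes, error_bound)

# Each restored value differs from the original by at most error_bound.
max_error = max(abs(a - b) for a, b in zip(update, restored))
print(codes, max_error)
```

The integer codes span a much smaller range than the original floats, so a subsequent lossless coding stage can store them in far fewer bits. Production compressors use considerably more elaborate pipelines than this sketch.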

In conclusion, APPFL is a significant advancement in federated learning, addressing the core challenges of data heterogeneity, computational disparity, and security. By providing an extensible framework that integrates advanced aggregation strategies and privacy-preserving technologies, APPFL improves the efficiency and accuracy of federated learning models. Its ability to reduce communication times by up to 40% and training times by 30% while maintaining high levels of privacy and model accuracy positions it as a leading solution for decentralized machine learning. The framework’s adaptability across different federated learning scenarios, from healthcare to finance, ensures that it will play a crucial role in the future of secure, distributed AI.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
