Primate Labs launches Geekbench AI benchmarking tool

Primate Labs has officially launched Geekbench AI, a benchmarking tool designed specifically for machine learning and AI-centric workloads.

The release of Geekbench AI 1.0 marks the culmination of years of development and collaboration with customers, partners, and the AI engineering community. The benchmark, previously known as Geekbench ML during its preview phase, has been rebranded to align with industry terminology and ensure clarity about its purpose.

Geekbench AI is now available for Windows, macOS, and Linux through the Primate Labs website, as well as on the Google Play Store and Apple App Store for mobile devices.

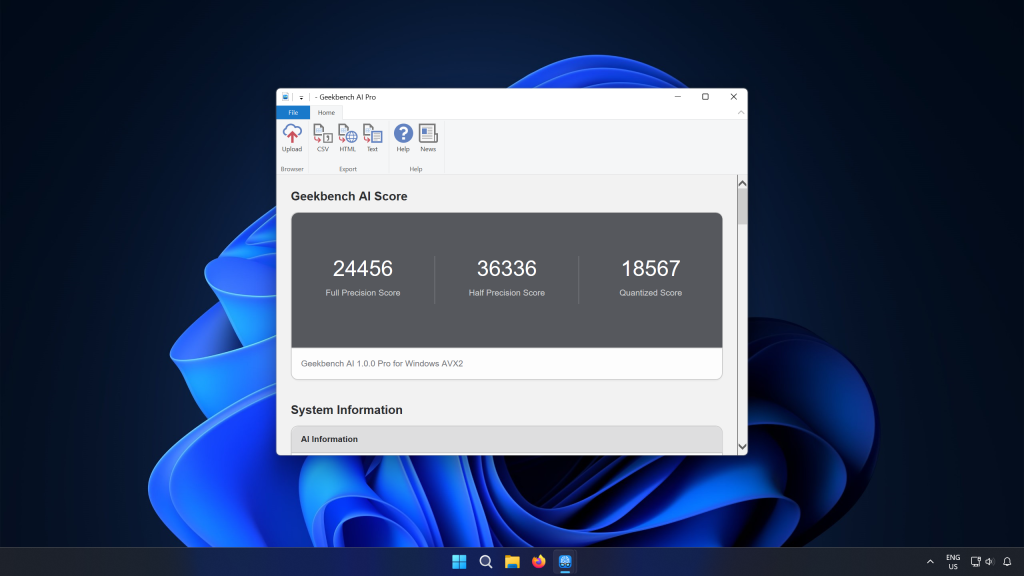

Primate Labs’ latest benchmarking tool aims to provide a standardised method for measuring and comparing AI capabilities across different platforms and architectures. Rather than a single number, the benchmark reports three overall scores, reflecting the complexity and heterogeneity of AI workloads.

“Measuring performance is, put simply, really hard,” explained Primate Labs. “That’s not because it’s hard to run an arbitrary test, but because it’s hard to determine which tests are the most important for the performance you want to measure – especially across different platforms, and particularly when everyone is doing things in subtly different ways.”

The three-score system, with separate results for single-precision, half-precision, and quantised workloads, accounts for the varied precision levels and hardware optimisations found in modern AI implementations. This multi-dimensional approach allows developers, hardware vendors, and enthusiasts to gain deeper insights into a device’s AI performance across different scenarios.

A notable addition to Geekbench AI is the inclusion of accuracy measurements for each test. This feature acknowledges that AI performance isn’t solely about speed but also about the quality of results. By combining speed and accuracy metrics, Geekbench AI provides a more holistic view of AI capabilities, helping users understand the trade-offs between performance and precision.
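To make the speed-versus-precision trade-off concrete, the sketch below quantises a handful of values to 8-bit integers and measures how far the restored values drift from the originals. It is an illustration only and not how Geekbench AI computes its accuracy figures; the function names, the sample values, and the choice of mean absolute error as the metric are all assumptions.

```python
def quantize(values, scale):
    """Map floats to int8-range integers (symmetric quantisation, assumed scheme)."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(qvalues, scale):
    """Map quantised integers back to approximate floats."""
    return [q * scale for q in qvalues]


def mean_abs_error(reference, candidate):
    """Average absolute difference between reference and candidate values."""
    return sum(abs(r - c) for r, c in zip(reference, candidate)) / len(reference)


# Hypothetical full-precision outputs standing in for a model's results.
reference = [0.02, -0.51, 0.98, 0.33, -0.77]

# Choose the scale so the largest magnitude maps to 127.
scale = max(abs(v) for v in reference) / 127

restored = dequantize(quantize(reference, scale), scale)
error = mean_abs_error(reference, restored)
print(f"mean absolute error after quantisation: {error:.6f}")
```

Lower-precision arithmetic is usually faster on hardware that supports it, and a measure like the error above is the kind of cost that an accuracy figure makes visible alongside the speed score.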

Geekbench AI 1.0 introduces support for a wide range of AI frameworks, including OpenVINO on Linux and Windows, and vendor-specific TensorFlow Lite delegates like Samsung ENN, ArmNN, and Qualcomm QNN on Android. This broad framework support ensures that the benchmark reflects the latest tools and methodologies used by AI developers.

The benchmark also utilises more extensive and diverse datasets, which not only enhance the accuracy evaluations but also better represent real-world AI use cases. All workloads in Geekbench AI 1.0 run for a minimum of one second, allowing devices to reach their maximum performance levels during testing while still reflecting the bursty nature of real-world applications.
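The minimum-duration rule can be pictured as a loop that keeps repeating a workload until enough wall-clock time has passed. The following is a minimal sketch under that assumption, not Primate Labs' implementation; `run_for_minimum` and `toy_workload` are hypothetical names, and the demo uses a shorter threshold than one second so it finishes quickly.

```python
import time


def run_for_minimum(workload, min_seconds=1.0):
    """Call workload() repeatedly until at least min_seconds have elapsed.

    Returns the number of completed iterations and the elapsed time.
    """
    iterations = 0
    start = time.perf_counter()
    while True:
        workload()
        iterations += 1
        elapsed = time.perf_counter() - start
        if elapsed >= min_seconds:
            return iterations, elapsed


def toy_workload():
    """Stand-in for one inference pass (hypothetical)."""
    sum(i * i for i in range(10_000))


# Short threshold for demonstration; the article describes a one-second minimum.
iterations, elapsed = run_for_minimum(toy_workload, min_seconds=0.05)
print(f"{iterations} iterations in {elapsed:.3f} s "
      f"({iterations / elapsed:.1f} iterations per second)")
```

Timing many iterations rather than one gives the device time to ramp up clocks and reduces the effect of timer resolution on very short workloads.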

Primate Labs has published detailed technical descriptions of the workloads and models used in Geekbench AI 1.0, emphasising its commitment to transparency and industry-standard testing methodologies. The benchmark is integrated with the Geekbench Browser, facilitating easy cross-platform comparisons and result sharing.

The company anticipates regular updates to Geekbench AI to keep pace with market changes and emerging AI features. However, Primate Labs believes that Geekbench AI has already reached a level of reliability that makes it suitable for integration into professional workflows, with major tech companies like Samsung and Nvidia already utilising the benchmark.

(Image Credit: Primate Labs)
