How Can Informal Reasoning Improve Formal Theorem Proving? This AI Paper Introduces an AI Framework for Learning to Interleave Informal Thoughts with Steps of Formal Proving

Traditional approaches to language-model-based theorem proving rely solely on formal proof data and overlook the informal reasoning that human mathematicians depend on. Because formal proofs contain no natural-language thought process, there is a significant gap between how humans reason and how machines produce proofs. Existing language models specialized for generating tactics in formal mathematics often fail to fully exploit the benefits of thought augmentation. The paper addresses this gap by bridging informal human reasoning and formal proof generation, with the aim of making automated theorem proving more efficient and accurate. It proposes a way to systematically interleave natural-language thoughts with formal proof tactics, enhancing language models' theorem-proving capabilities.

Previous research in learning-based theorem proving has largely followed the GPT-f framework: train a language model on formal proof data, then use it to guide a best-first tree search over proof states. Follow-up studies explored enhancements such as data augmentation, new proof search methods, and retrieval augmentation. The Draft-Sketch-Prove approach, while promising in Isabelle, has been harder to apply in Lean because Lean lacks comparably powerful automatic proving tools. Rationale-augmented reasoning studies showed the benefit of letting models reason before answering, and iterative fine-tuning methods such as the Self-Taught Reasoner (STaR) and expert iteration showed that models can improve by training on their own successful outputs, with expert iteration bringing gains in formal theorem proving specifically. However, natural-language reasoning is scarce in formal proofs, so effective training examples have to be synthesized. This is the gap Lean-STaR targets.
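A minimal sketch of the GPT-f-style best-first search described above, assuming the common scoring rule of ranking open proof states by the cumulative log-probability of the tactics that reached them. The `propose` and `apply_tactic` callables are hypothetical stand-ins for a tactic-generating language model and a Lean interaction layer (this is not LeanDojo's API), and the toy integer "goal" exists only so the sketch runs end to end.

```python
import heapq
import itertools
import math
from typing import Callable, Hashable, List, Optional, Tuple

# Hypothetical interfaces (not a real library API):
#   propose(state)              -> [(tactic, log_prob), ...] from a language model
#   apply_tactic(state, tactic) -> next proof state, or None if the tactic fails
Propose = Callable[[Hashable], List[Tuple[str, float]]]
ApplyTactic = Callable[[Hashable, str], Optional[Hashable]]


def best_first_search(root: Hashable, propose: Propose, apply_tactic: ApplyTactic,
                      is_proved: Callable[[Hashable], bool],
                      max_expansions: int = 100) -> Optional[List[str]]:
    """Return a tactic list that closes the goal, or None if the budget runs out."""
    tie = itertools.count()  # tie-breaker so the heap never compares states
    # heapq is a min-heap, so each entry stores the NEGATED cumulative log-prob.
    frontier = [(0.0, next(tie), root, [])]
    seen = {root}
    for _ in range(max_expansions):
        if not frontier:
            return None
        neg_score, _, state, tactics = heapq.heappop(frontier)
        if is_proved(state):
            return tactics
        for tactic, log_prob in propose(state):
            child = apply_tactic(state, tactic)
            if child is None or child in seen:
                continue  # the tactic errored, or this state is already queued
            seen.add(child)
            heapq.heappush(frontier, (neg_score - log_prob, next(tie), child, tactics + [tactic]))
    return None


# Toy "theorem": the goal is a natural number, and it is proved once it reaches 0.
def toy_propose(n: int) -> List[Tuple[str, float]]:
    return [("halve", math.log(0.6)), ("decrement", math.log(0.4))]


def toy_apply(n: int, tactic: str) -> Optional[int]:
    if tactic == "halve":
        return n // 2 if n % 2 == 0 else None  # fails on odd goals
    if tactic == "decrement":
        return n - 1 if n > 0 else None
    return None


proof = best_first_search(6, toy_propose, toy_apply, lambda n: n == 0)
# proof == ["halve", "decrement", "halve", "decrement"]
```

Skipping already-seen states is a design choice in this sketch to avoid re-expanding duplicates; real provers differ in how they deduplicate and in how many tactics they sample per expansion.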

Researchers from the Language Technologies Institute at Carnegie Mellon University and the Institute for Interdisciplinary Information Sciences at Tsinghua University collaborated on this work in language-model-based theorem proving, combining expertise in language technologies and information sciences. By incorporating informal thought processes into formal proof steps, the team aims to bridge the gap between human reasoning and machine-driven theorem proving, with applications to automated reasoning in mathematics and artificial intelligence.

Lean-STaR changes how language models approach theorem proving by having them produce an informal thought before each formal proof step, bridging the gap between informal and formal mathematics. The framework trains language models on thoughts generated retrospectively from ground-truth tactics, followed by expert iteration, and achieves state-of-the-art results in the Lean environment. Unlike traditional approaches that train only on formal proof data, Lean-STaR generates a natural-language thought for each step, producing a thought-augmented dataset. This significantly improves theorem-proving performance and addresses a key limitation of existing approaches, which ignore informal information. By teaching language models to combine informal reasoning with formal verification, Lean-STaR advances automated theorem proving, an area important to both mathematics and AI.
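To make the interleaving concrete, here is a small hand-written Lean 4 proof in which each tactic is preceded by the kind of informal thought Lean-STaR is trained to produce. Writing the thoughts as Lean comments is only a presentation device for this sketch; in Lean-STaR the thought is generated by the model as text before the tactic and is not part of the checked proof.

```lean
example (a b c : Nat) (h : a = b) : a + c = c + b := by
  -- Thought: the hypothesis `h` lets us replace `a` with `b`,
  -- which should leave the goal `b + c = c + b`.
  rw [h]
  -- Thought: what remains is commutativity of addition on `Nat`.
  exact Nat.add_comm b c
```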

Modern theorem-proving systems combine language models with formal methods. Models trained on (proof state, tactic) pairs guide the proof search, and autoformalization, the automatic translation of informal mathematics into formal statements, helps connect the informal and formal worlds. Lean-STaR exemplifies this integration by combining informal reasoning with formal proofs in Lean. Rationale-augmented approaches have boosted language model performance across many domains, and bootstrapping techniques refine a model's reasoning by training it on its own self-generated data. Lean-STaR applies this idea through expert iteration, a simple form of reinforcement learning, to iteratively strengthen the interplay between informal thoughts and rigorous formal proofs.

The research aims to enhance language models' theorem-proving abilities by integrating informal thoughts with formal verification. The Lean-STaR framework teaches models to generate a natural-language thought before each proof step. Training proceeds in two stages. First, for each step of a human-written proof, a strong model (GPT-4 in the paper) writes a retrospective thought: given the proof state and the ground-truth tactic that was actually used, it explains the reasoning behind that tactic. Second, a thought-augmented tactic predictor is fine-tuned to produce the thought and then the tactic from the proof state alone. By interleaving informal thoughts with formal proving, Lean-STaR bridges the gap between informal and formal mathematics and offers new insight into mathematical reasoning and automated theorem proving.
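The two-stage recipe above can be sketched as a small data pipeline. This is a minimal illustration, assuming proof states are plain strings and that `annotate` wraps some strong annotator model (the paper used GPT-4); the prompt wording, the `[STATE]`/`[THOUGHT]`/`[TACTIC]` markers, and every function name here are hypothetical, not the paper's actual templates.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass(frozen=True)
class ThoughtExample:
    state: str    # pretty-printed Lean proof state
    thought: str  # informal reasoning written retrospectively
    tactic: str   # ground-truth next tactic from the human-written proof


def retrospective_prompt(state: str, tactic: str) -> str:
    """Stage 1 prompt (hypothetical wording): the annotator sees the answer and explains it."""
    return (
        "Below is a Lean proof state and the tactic a human applied next.\n"
        f"Proof state:\n{state}\n"
        f"Next tactic: {tactic}\n"
        "Explain, in informal mathematical language, why this is a sensible next step."
    )


def build_thought_dataset(pairs: Iterable[Tuple[str, str]],
                          annotate: Callable[[str], str]) -> List[ThoughtExample]:
    """Turn (state, tactic) pairs from human proofs into (state, thought, tactic) triples."""
    return [ThoughtExample(s, annotate(retrospective_prompt(s, t)), t) for s, t in pairs]


def to_training_pair(ex: ThoughtExample) -> Tuple[str, str]:
    """Stage 2 format: the input is the state alone; the target is the thought, then the tactic."""
    source = f"[STATE]\n{ex.state}\n"
    target = f"[THOUGHT]\n{ex.thought}\n[TACTIC]\n{ex.tactic}"
    return source, target


# Usage with a stub annotator standing in for a real model call.
def stub_annotate(prompt: str) -> str:
    return "Use h to replace a with b, so the goal becomes b + c = c + b."


pairs = [("a b c : ℕ\nh : a = b\n⊢ a + c = c + b", "rw [h]")]
dataset = build_thought_dataset(pairs, stub_annotate)
source, target = to_training_pair(dataset[0])
```

The key point the sketch tries to capture is the asymmetry: the annotator sees the ground-truth tactic when writing the thought, but the fine-tuned model does not, so at inference time it must produce the thought first and then derive the tactic from it.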

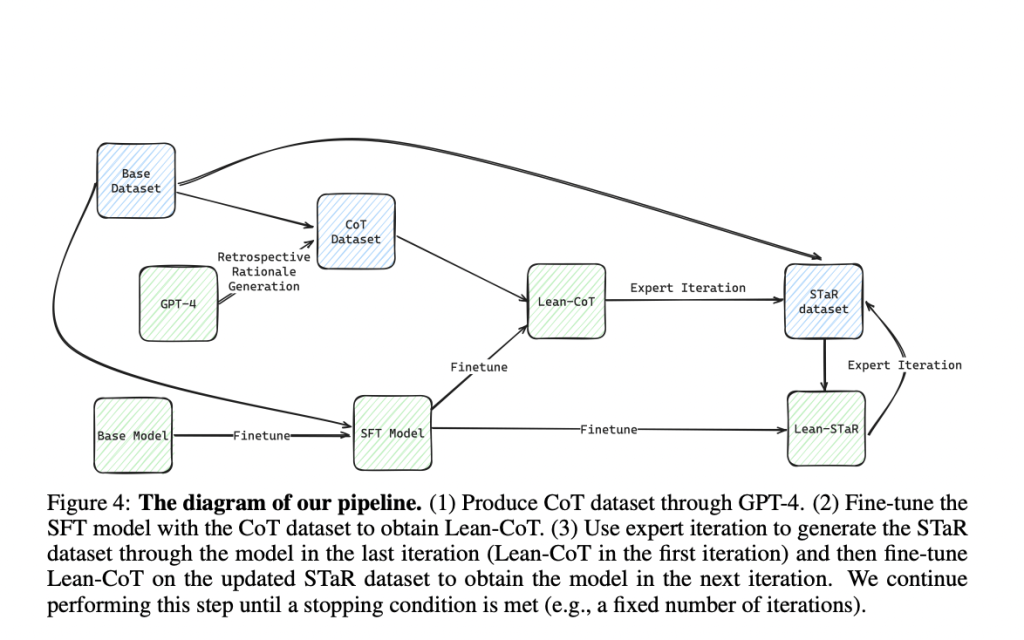

To collect expert-iteration data, the authors sampled 32 proof attempts in parallel (temperature 1.0) for problems in the LeanDojo Benchmark 4 dataset, with a limit of 5 tactics and 1 minute per problem. Every successful (state, thought, tactic) example was saved for training. This produced 32,231 examples over 4 days on 8 A100 GPUs, an average of about 0.5 A100 minutes per problem. The resulting “STaR dataset” was used to fine-tune the model: the final Lean-STaR model was obtained by training the supervised fine-tuned (SFT) model for 1 epoch on a combination of the GPT-4-annotated reasoning data and the expert-iteration data.
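The data-collection loop described above can be sketched as follows. This is a simplified, sequential illustration under stated assumptions: `sample_step` is a hypothetical wrapper around the thought-augmented model that returns a (thought, tactic) pair at the given temperature, `apply_tactic` is a hypothetical Lean interface returning the next state or `None` on failure, the 5-tactic cap is applied to each sampled attempt while the 1-minute budget covers the whole problem, and duplicate steps are dropped. The paper ran its 32 samples in parallel on GPUs.

```python
import time
from typing import Any, Callable, Iterable, List, Optional, Tuple

Step = Tuple[Any, str, str]  # (proof state, thought, tactic)
SampleStep = Callable[[Any, float], Tuple[str, str]]  # hypothetical model wrapper
ApplyTactic = Callable[[Any, str], Optional[Any]]      # hypothetical Lean interface


def sample_attempt(root: Any, sample_step: SampleStep, apply_tactic: ApplyTactic,
                   is_proved: Callable[[Any], bool], max_tactics: int,
                   temperature: float, deadline: float) -> Optional[List[Step]]:
    """Roll out one thought-augmented proof attempt; return its steps only if it succeeds."""
    state, steps = root, []
    for _ in range(max_tactics):
        if time.monotonic() > deadline:
            return None  # per-problem time budget exhausted
        thought, tactic = sample_step(state, temperature)
        next_state = apply_tactic(state, tactic)
        if next_state is None:
            return None  # Lean rejected the tactic
        steps.append((state, thought, tactic))
        if is_proved(next_state):
            return steps
        state = next_state
    return None  # tactic cap reached before the goal was closed


def collect_star_data(theorems: Iterable[Any], sample_step: SampleStep,
                      apply_tactic: ApplyTactic, is_proved: Callable[[Any], bool],
                      num_samples: int = 32, max_tactics: int = 5,
                      temperature: float = 1.0, time_limit_s: float = 60.0) -> List[Step]:
    """Keep every (state, thought, tactic) step from successful attempts, deduplicated."""
    dataset: List[Step] = []
    seen = set()
    for root in theorems:
        deadline = time.monotonic() + time_limit_s
        for _ in range(num_samples):
            steps = sample_attempt(root, sample_step, apply_tactic, is_proved,
                                   max_tactics, temperature, deadline)
            for step in steps or []:
                if step not in seen:
                    seen.add(step)
                    dataset.append(step)
    return dataset


# Toy check: goals are integers, a goal is proved at 0, and the "model" is deterministic.
def toy_sample_step(n: int, temperature: float) -> Tuple[str, str]:
    if n % 2 == 0:
        return "The goal is even, so halve it.", "halve"
    return "The goal is odd, so decrement it.", "decrement"


def toy_apply_tactic(n: int, tactic: str) -> Optional[int]:
    return n // 2 if tactic == "halve" else n - 1


data = collect_star_data([6, 30], toy_sample_step, toy_apply_tactic, lambda n: n == 0)
# 6 is proved in 4 steps; 30 needs more than 5 tactics, so it contributes nothing.
```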

Lean-STaR showed both qualitative and quantitative gains in theorem proving. It achieved state-of-the-art results on the miniF2F-test benchmark, raising Pass@64 from 43.4% to 46.3%. The authors attribute the improvement to incorporating informal thoughts and to generating synthetic thoughts retrospectively from ground-truth tactics. Fine-tuning on GPT-4-annotated reasoning data combined with the more than 32,000 (proof state, thought, next tactic) examples from expert iteration added further gains, at a data-collection cost of about 0.5 A100 minutes per problem.
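Pass@k counts a problem as solved if at least one of k attempts produces a proof that Lean accepts. For scale, 43.4% to 46.3% is an absolute gain of 2.9 percentage points. A minimal sketch of computing the metric from per-problem attempt counts is below; it uses the unbiased estimator 1 - C(n-c, k)/C(n, k) popularized in code-generation evaluation, which reduces to "any success among the attempts" when n = k. This is an illustrative assumption, not the paper's evaluation script, and search-based provers may define an "attempt" differently.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that k attempts drawn from n (with c successes) include a success."""
    if n - c < k:
        return 1.0  # every size-k subset must contain a success
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given as (attempts, successes) pairs."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)


# Three hypothetical problems, each attempted 64 times.
results = [(64, 0), (64, 1), (64, 64)]
score = benchmark_pass_at_k(results, k=64)  # 2 of 3 problems solved
```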

In conclusion, the study shows that Lean-STaR substantially improves language models' theorem-proving capabilities by integrating chain-of-thought rationales into proof steps. The method generates synthetic rationales from ground-truth tactics and then fine-tunes models to predict tactics, producing the Lean-CoT model. Expert iteration further improved performance, achieving new state-of-the-art results on the miniF2F-test benchmark (36.1% pass rate, up from 30.3%). Although the framework offers a scalable path toward better mathematical understanding, the authors note limitations: computational scalability, potential biases in GPT-4-generated data, and bottlenecks caused by the speed of the Lean interactive theorem prover (ITP). Despite these challenges, Lean-STaR demonstrates the effectiveness of integrating informal thoughts into formal proof generation.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Shoaib Nazir is a consulting intern at MarktechPost and has completed a dual-degree M.Tech at the Indian Institute of Technology (IIT), Kharagpur. With a strong passion for data science, he is particularly interested in applications of artificial intelligence across diverse domains. Shoaib is driven by a desire to explore the latest technological advancements and their practical implications in everyday life. His enthusiasm for innovation and real-world problem-solving fuels his continuous learning and contributions to the field of AI.