Microsoft Researchers Developed SheetCompressor: An Innovative Encoding Artificial Intelligence Framework that Compresses Spreadsheets Effectively for LLMs

Spreadsheet analysis is essential for managing and interpreting data within extensive, flexible, two-dimensional grids used in tools like Microsoft Excel and Google Sheets. These grids include various formatting and complex structures, which pose significant challenges for data analysis and intelligent user interaction. The goal is to enhance models’ understanding and reasoning capabilities when dealing with such intricate data formats. Researchers have long sought methods to improve the efficiency and accuracy of large language models (LLMs) in this domain.

The primary challenge in spreadsheet analysis is the large, complex grids that often exceed the token limits of LLMs. These grids contain numerous rows and columns with diverse formatting options, making it difficult for models to process and extract meaningful information efficiently. Traditional methods are hampered by the size and complexity of the data, which degrades performance as the spreadsheet size increases. Researchers must find ways to compress and simplify these large datasets while maintaining critical structural and contextual information.

Existing methods to encode spreadsheets for LLMs often need to be revised. Token constraints limit simple serialization methods that include cell addresses, values, and formats and fail to preserve the structural and layout information critical for understanding spreadsheets. This inefficiency necessitates innovative solutions that can handle larger datasets effectively while maintaining the integrity of the data.

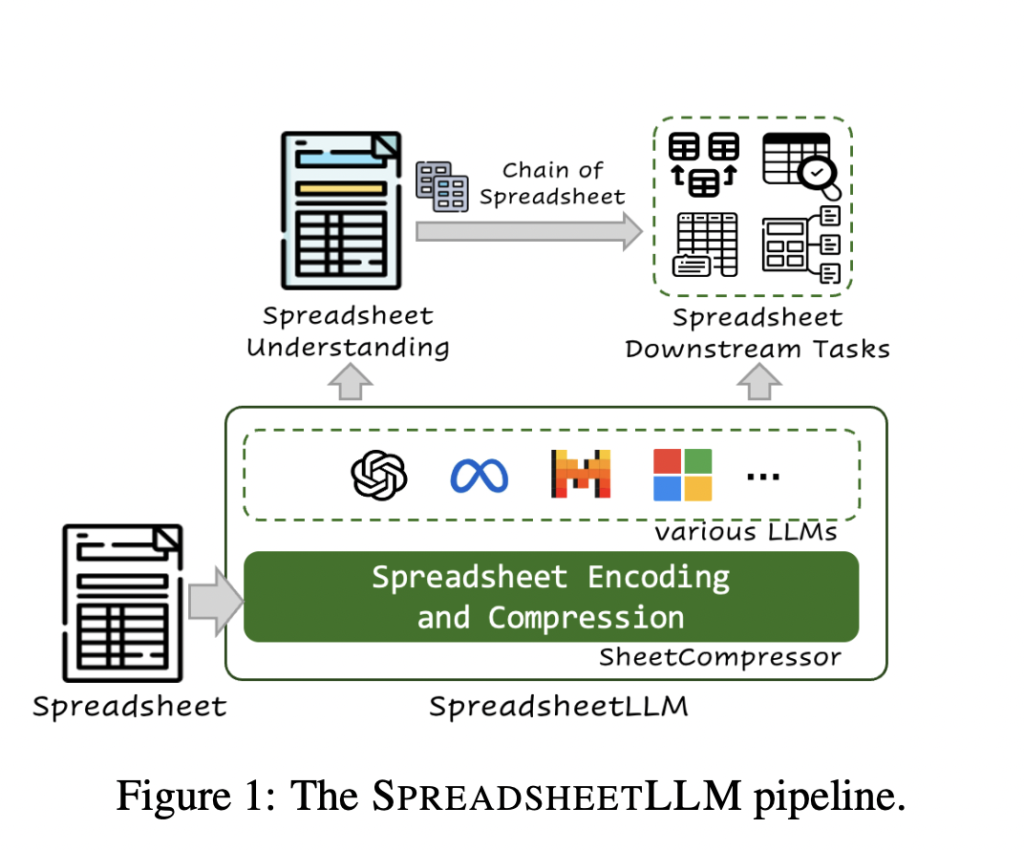

Researchers at Microsoft Corporation introduced SPREADSHEETLLM, a pioneering framework designed to enhance the capabilities of LLMs in spreadsheet understanding and reasoning. This method utilizes an innovative encoding framework called SHEETCOMPRESSOR. The framework comprises three main modules: structural-anchor-based compression, inverse index translation, and data-format-aware aggregation. These modules collectively improve the encoding and compression of spreadsheets, allowing LLMs to process them more efficiently and effectively.

The SHEETCOMPRESSOR framework begins with structural-anchor-based compression. This method identifies heterogeneous rows and columns crucial for understanding the spreadsheet’s layout. Large spreadsheets often contain numerous homogeneous rows or columns, which contribute minimally to understanding the design. By identifying and focusing on structural anchors—heterogeneous rows and columns at table boundaries—the framework creates a condensed “skeleton” version of the spreadsheet, significantly reducing its size while preserving essential structural information.

The second module, inverted-index translation, addresses the inefficiency of traditional row-by-row and column-by-column serialization, which is token-consuming, especially with numerous empty cells and repetitive values. This method uses a lossless inverted-index translation in JSON format, creating a dictionary that indexes non-empty cell texts and merges addresses with identical text. This optimization significantly reduces token usage while preserving data integrity.

The final module, data-format-aware aggregation, further enhances efficiency by clustering adjacent numerical cells with similar formats. Recognizing that exact numerical values are less critical for understanding the spreadsheet’s structure; this method extracts number format strings and data types, clustering cells with the same formats or types. This technique streamlines the understanding of numerical data distribution without excessive token expenditure.

In tests, SHEETCOMPRESSOR significantly reduced token usage for spreadsheet encoding by 96%. The framework demonstrated exceptional performance in spreadsheet table detection, a foundational task for spreadsheet understanding, surpassing the previous state-of-the-art method by 12.3%. Specifically, it achieved an F1 score of 78.9%, a notable improvement over existing models. This enhanced performance is particularly evident in handling larger spreadsheets, where traditional methods struggle due to token limits.

SPREADSHEETLLM’s fine-tuned models showed impressive results across various tasks. For instance, the framework’s compression ratio reached 25×, substantially reducing computational load and enabling practical applications on large datasets. In a representative spreadsheet QA task, the model outperformed existing methods, validating the effectiveness of its approach. The Chain of Spreadsheet (CoS) methodology, inspired by the Chain of Thought framework, decomposes spreadsheet reasoning into a table detection-match-reasoning pipeline, significantly improving performance in table QA tasks.

In conclusion, SPREADSHEETLLM represents a significant advancement in the processing and understanding spreadsheet data using LLMs. The innovative SHEETCOMPRESSOR framework effectively addresses the challenges posed by spreadsheet size, diversity, and complexity, achieving substantial reductions in token usage and computational costs. This advancement enables practical applications on large datasets and enhances the performance of LLMs in spreadsheet understanding tasks. By leveraging innovative compression techniques, SPREADSHEETLLM sets a new standard in the field, paving the way for more advanced and intelligent data management tools.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 46k+ ML SubReddit

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.